Lab Report: Malware Detection Research Based on Ransomware, Machine Learning, and Deep Learning


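Background

As information technology advances, the variety and volume of computer viruses keep growing, which poses a serious challenge to information security. Traditional virus detection relies mainly on signatures and heuristic analysis, and it performs poorly against new viruses and variants. Machine learning and deep learning, two major branches of modern artificial intelligence, offer an alternative. Malware is a major threat to network security, and ransomware is among the most damaging kinds: it encrypts the victim's files and demands a ransom. This experiment has three parts: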

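- Design a simple ransomware demo to understand the basic behavior of malware.

- Detect malware with machine learning methods.

- Detect and classify malware with deep learning methods.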

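Part 1: Ransomware Demo Design

Objective

Use ChatGPT to build a simplified model of ransomware that simulates the file encryption and decryption flow, in order to study the behavioral characteristics of malware.

Technical Implementation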

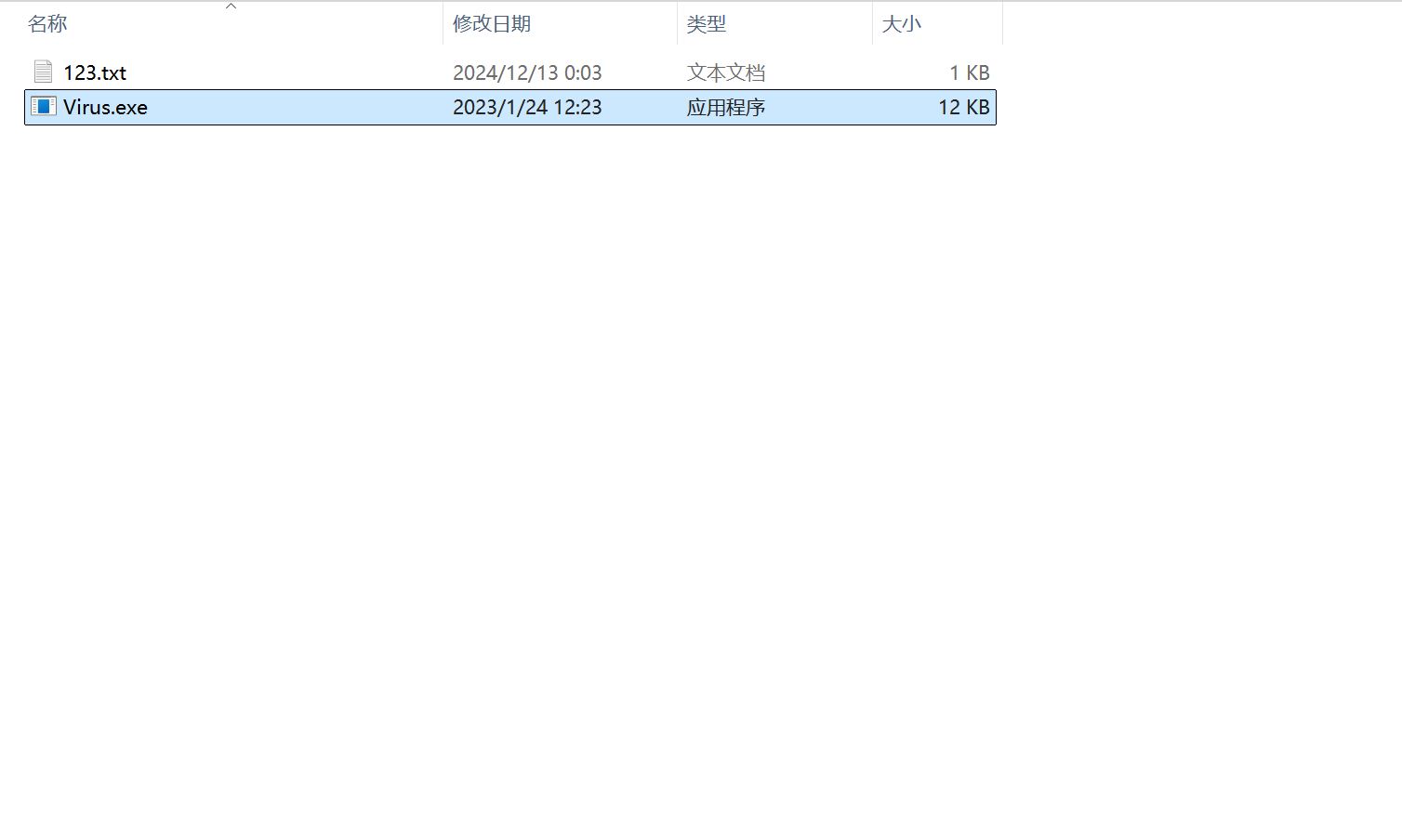

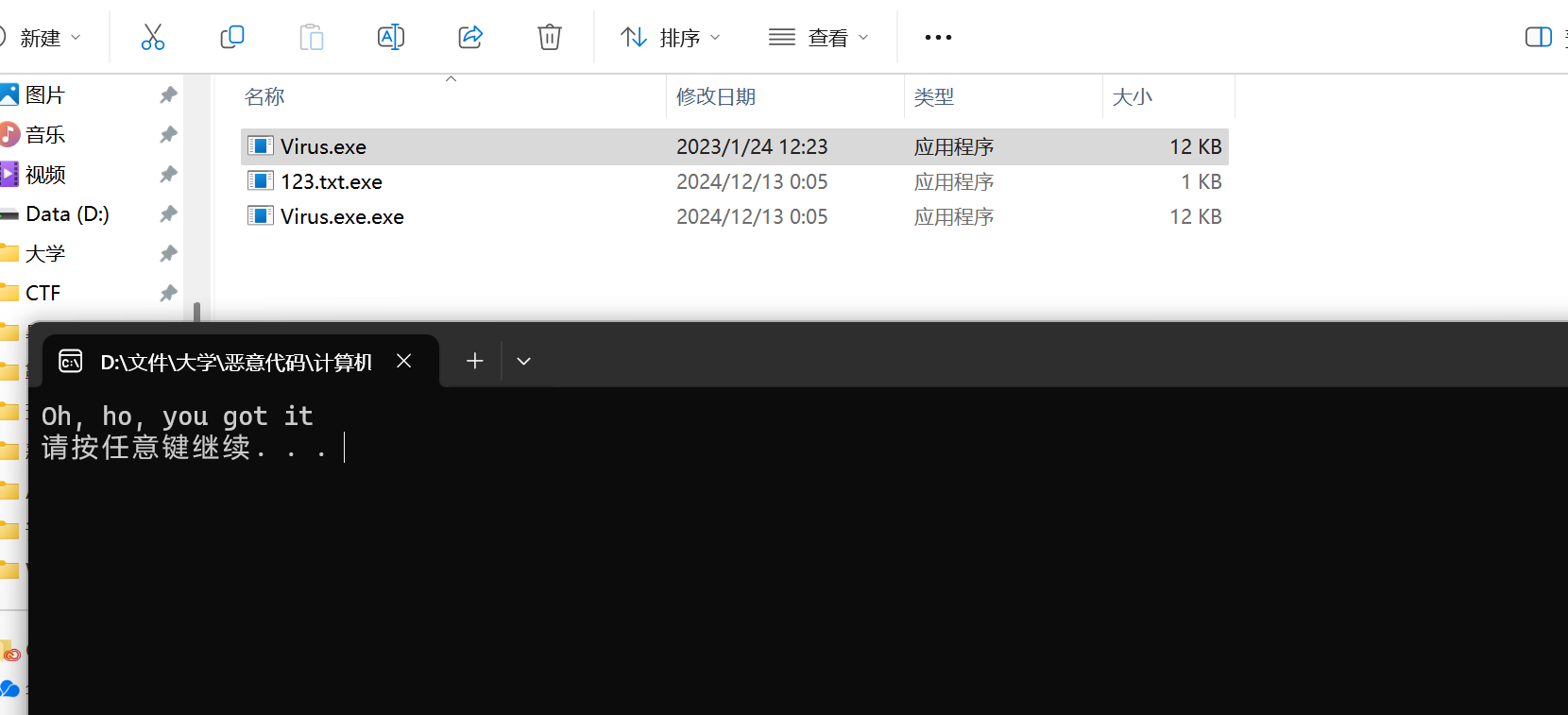

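- Encryption:

  - Traverses all files in the current directory and its subdirectories.

  - Encrypts file contents with a simple exclusive-or (XOR) cipher and changes the extension of the encrypted file to .exe.

  - Deletes the original file and keeps the encrypted copy.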

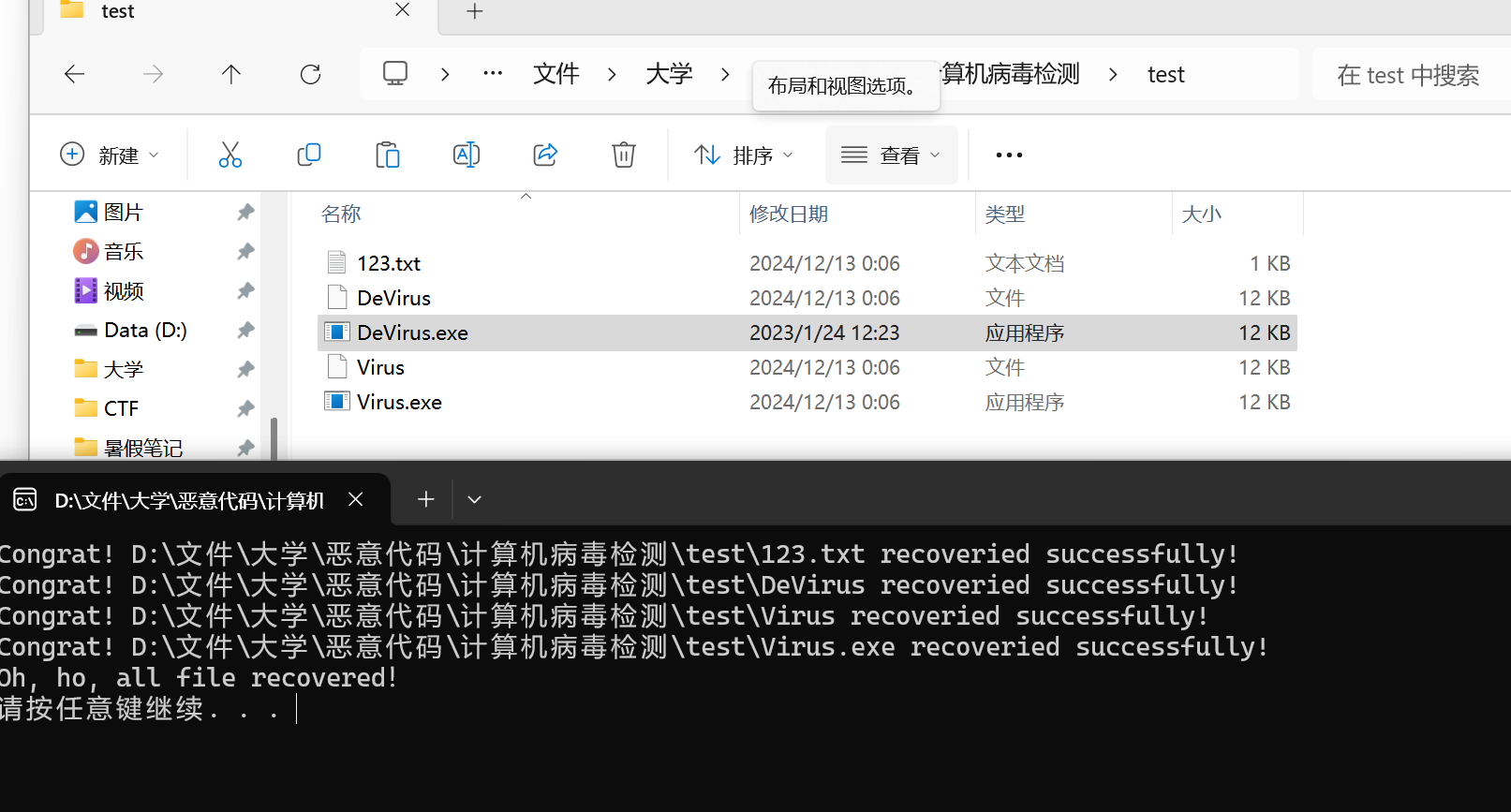

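- Decryption:

  - Traverses the encrypted files and restores the original contents with the same XOR logic.

  - Deletes the encrypted files, returning each file to its original state.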

Code Logic

- File traversal: recursively walks folders using the Windows API.

- File operations: reads and writes files through `FILE*` file pointers.

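Safety Notes

- For study only; use for any illegal purpose is prohibited.

- All operations should be confined to a "test folder" so that a mistake cannot cause data loss.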

encode-main.cpp:

`#include <stdio.h>`

encode-funcs.cpp:

`#include "funcs.h"`

encode-funcs.h:

`#pragma once`

decode-main.cpp:

`#include <stdio.h>`

decode-funcs.cpp:

`#include "funcs.h"`

decode-funcs.h:

`#pragma once`

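Part 2: Machine-Learning-Based Malware Detection

Malware is software designed to damage a target computer or to consume its resources; it includes worms, trojans, ransomware, and more. In recent years, as cryptocurrencies have become popular, mining malware has also appeared at scale and seriously harms users. Combining machine learning and deep learning techniques can improve both the detection rate and the generalization of malware detection.

This experiment is built on the malware detection dataset from the Alibaba Cloud Security Malware Detection competition and explores several approaches, including:

- Simulating ransomware behavior.

- Machine-learning-based malware detection.

- Deep-learning-based malware classification.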

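Dataset Overview:

Alibaba Cloud Security Malware Detection dataset (Alibaba Cloud dataset)

The dataset used in this experiment is provided by Alibaba Cloud. It contains the API call sequences of Windows executables recorded while they ran in a sandbox. It totals about 600 million records and covers several malware types as well as benign files. Its main characteristics and contents are as follows: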

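1. Data structure

| Field | Data type | Description |
|---|---|---|
| file_id | bigint | File ID |
| label | bigint | File label: 0 (benign), 1 (ransomware), 2 (cryptominer), 3 (DDoS trojan), 4 (worm), 5 (file-infecting virus), 6 (backdoor), 7 (trojan) |
| api | string | Name of the API called by the file |
| tid | bigint | ID of the thread that made the API call |
| return_value | string | API return value |
| index | string | Sequence number of the API call; order is guaranteed within a thread, but calls in different threads have no defined order |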

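2. Data scale

- Training data: about 90 million calls from more than 10,000 files (aggregated by file ID).

- Test data: about 80 million calls from about 10,000 files.

- A file may make more than 5,000 API calls; calls beyond that limit were truncated.

3. Data preprocessing

- All data has been anonymized to protect privacy and security.

- Each record carries the full call context and thread information, which helps a model capture program behavior.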

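4. Evaluation metric

LogLoss (logarithmic loss) is used as the evaluation metric:

$\text{logloss} = -\frac{1}{N} \sum_{i=1}^N \sum_{j=1}^M \left[ y_{ij} \log(P_{ij}) + (1 - y_{ij}) \log(1 - P_{ij}) \right]$

where:

- $M$: the number of classes (8 in total, labels 0 to 7).

- $N$: the total number of samples in the test set.

- $y_{ij}$: whether sample $i$ belongs to class $j$ (1 if yes, 0 if no).

- $P_{ij}$: the predicted probability that sample $i$ belongs to class $j$ (for example prob0, prob1, prob2, prob3, prob4, prob5, prob6, prob7).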
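To make the metric concrete, the formula can be evaluated directly. The following is a minimal sketch that uses only the Python standard library; the function name `competition_logloss`, the clipping constant `eps`, and the toy numbers are assumptions for illustration, not part of the competition tooling.

```python
import math

def competition_logloss(y_true, probs, eps=1e-15):
    """Log loss as defined above.

    y_true[i] is the integer label of sample i;
    probs[i][j] is the predicted probability that sample i is in class j.
    """
    n = len(y_true)
    total = 0.0
    for label, row in zip(y_true, probs):
        for j, p in enumerate(row):
            p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0 or 1
            y = 1.0 if j == label else 0.0   # y_ij: 1 if sample i is in class j
            total += y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return -total / n

# Two samples, three classes (toy numbers, not taken from the dataset).
y_true = [0, 2]
probs = [[0.8, 0.1, 0.1],
         [0.2, 0.2, 0.6]]
loss = competition_logloss(y_true, probs)
print(loss)
```

Note that this definition also penalizes probability assigned to the wrong classes through the $(1 - y_{ij})$ term, so it differs from the single-term multiclass cross-entropy that many libraries report as log loss.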

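Method

- Feature extraction:

  - Format the malware data, mainly the API call sequences and thread information.

  - Encode the API field consistently: use LabelEncoder to map each API name to an integer so that models can process it.

  - Pad or truncate each API call sequence to a fixed length (maximum length 5000).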

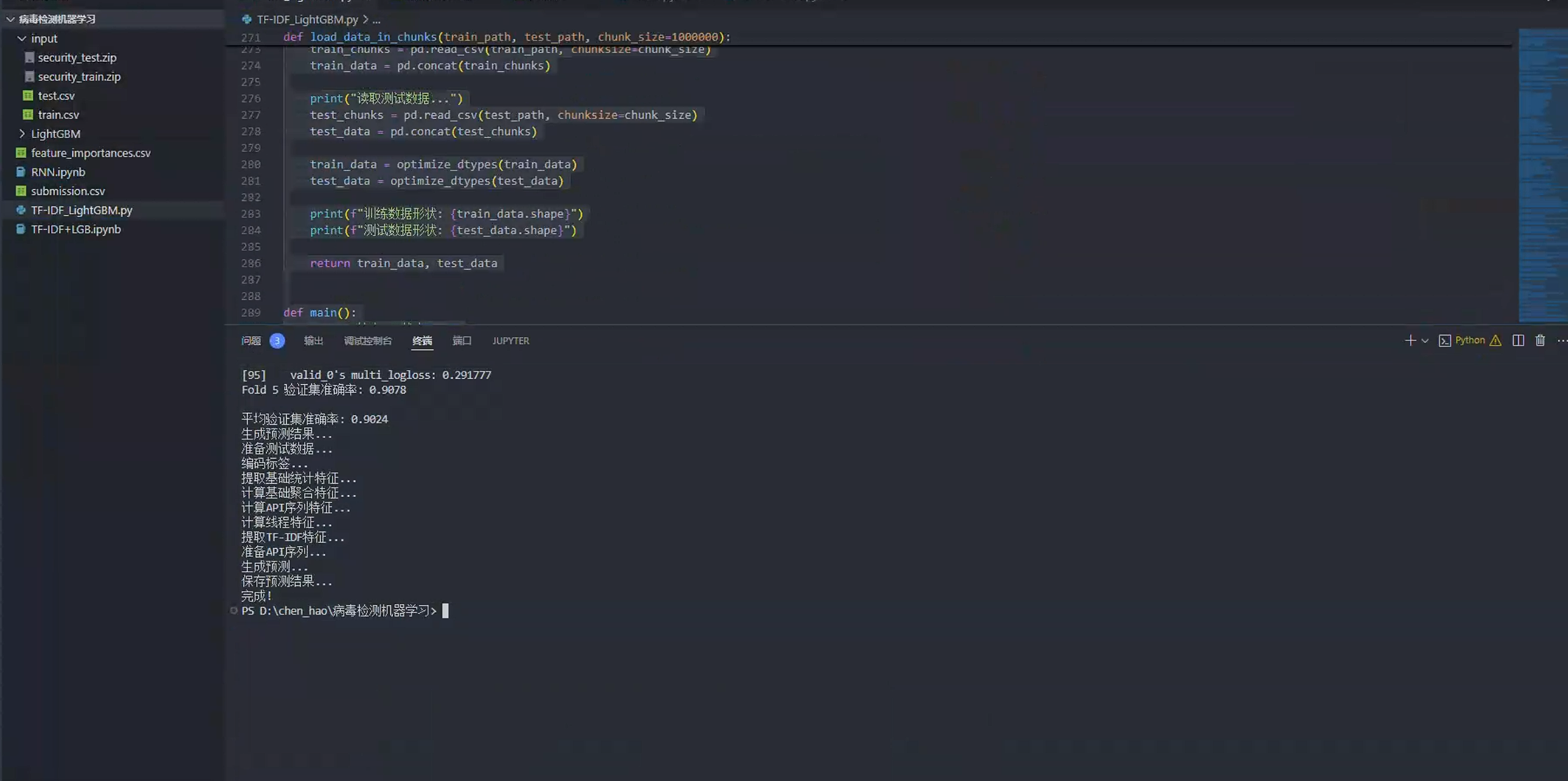

  - Extract features from the API call text with TF-IDF.
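The encoding and padding steps above can be sketched as follows. This is a standard-library stand-in for scikit-learn's `LabelEncoder`, not the report's actual code: the helper names, the choice to reserve 0 as the padding value (so API IDs start at 1), and the sort by `(tid, index)` are all assumptions made for illustration.

```python
from collections import defaultdict

MAX_LEN = 5000  # maximum sequence length stated above
PAD_ID = 0      # assumption: 0 is reserved for padding

def build_vocab(api_names):
    """Map each distinct API name to an integer, starting at 1."""
    return {name: i for i, name in enumerate(sorted(set(api_names)), start=1)}

def encode_sequences(rows, vocab, max_len=MAX_LEN):
    """rows: iterable of (file_id, tid, index, api) records.

    Returns {file_id: list of max_len integers}.
    """
    per_file = defaultdict(list)
    for file_id, tid, index, api in rows:
        per_file[file_id].append((tid, int(index), api))
    encoded = {}
    for file_id, calls in per_file.items():
        # Call order is only defined within a thread, so group by thread first.
        calls.sort(key=lambda c: (c[0], c[1]))
        ids = [vocab[api] for _, _, api in calls][:max_len]  # truncate
        ids += [PAD_ID] * (max_len - len(ids))               # pad
        encoded[file_id] = ids
    return encoded

# Toy records (hypothetical values in the dataset's column layout).
rows = [
    (1, 100, "0", "LdrLoadDll"),
    (1, 100, "1", "NtCreateFile"),
    (2, 200, "0", "NtCreateFile"),
]
vocab = build_vocab([r[3] for r in rows])
encoded = encode_sequences(rows, vocab, max_len=4)
print(encoded)
```

Reserving a dedicated padding value keeps padded positions distinguishable from real API calls, which matters for sequence models that mask padding.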

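- Model training:

  - Extract features with TF-IDF and feed them to a LightGBM classifier.

  - TF-IDF uses unigrams and bigrams, with the number of features capped at 1000.

  - Evaluate model performance with 5-fold cross-validation.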

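Results

- Implementation:

  - Feature extraction: TF-IDF vectorization of the API sequences.

  - Model training: LightGBM on the multiclass task.

- Performance:

  - Mean validation accuracy: 90.24%.

  - Accuracy per cross-validation fold:

    - Fold 1: 90.82%

    - Fold 2: 89.67%

    - Fold 3: 90.49%

    - Fold 4: 89.41%

    - Fold 5: 90.78%

- Feature contribution:

  - TF-IDF features contribute substantially to the model, especially high-frequency API combinations. Thread-related statistical features, such as call counts and API distribution, also clearly improve classification.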
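The report does not list the exact statistical features it used, so the following is only a sketch of what per-file call-count and API-distribution features could look like. Every feature name here (`call_count`, `unique_apis`, `thread_count`, `top_api_share`) is hypothetical.

```python
from collections import Counter, defaultdict

def per_file_stats(rows):
    """rows: iterable of (file_id, tid, api). Returns {file_id: feature dict}."""
    api_counts = defaultdict(Counter)
    threads = defaultdict(set)
    for file_id, tid, api in rows:
        api_counts[file_id][api] += 1
        threads[file_id].add(tid)
    stats = {}
    for file_id, counts in api_counts.items():
        total = sum(counts.values())
        stats[file_id] = {
            "call_count": total,                            # number of API calls
            "unique_apis": len(counts),                     # distinct API names
            "thread_count": len(threads[file_id]),          # distinct thread IDs
            "top_api_share": max(counts.values()) / total,  # share of the most frequent API
        }
    return stats

# Toy records (hypothetical values).
rows = [
    (1, 100, "NtClose"),
    (1, 100, "NtClose"),
    (1, 101, "LdrLoadDll"),
    (2, 200, "NtClose"),
]
stats = per_file_stats(rows)
print(stats[1])
```

Features of this kind are dense and low-dimensional, so they can be concatenated with the sparse TF-IDF matrix before training a gradient-boosted model.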

`PS D:\chen_hao\病毒检测机器学习> & D:/ProgramSoftware/anaconda3/python.exe d:/chen_hao/病毒检测机器学习/TF-IDF_LightGBM.py`

`import numpy as np`

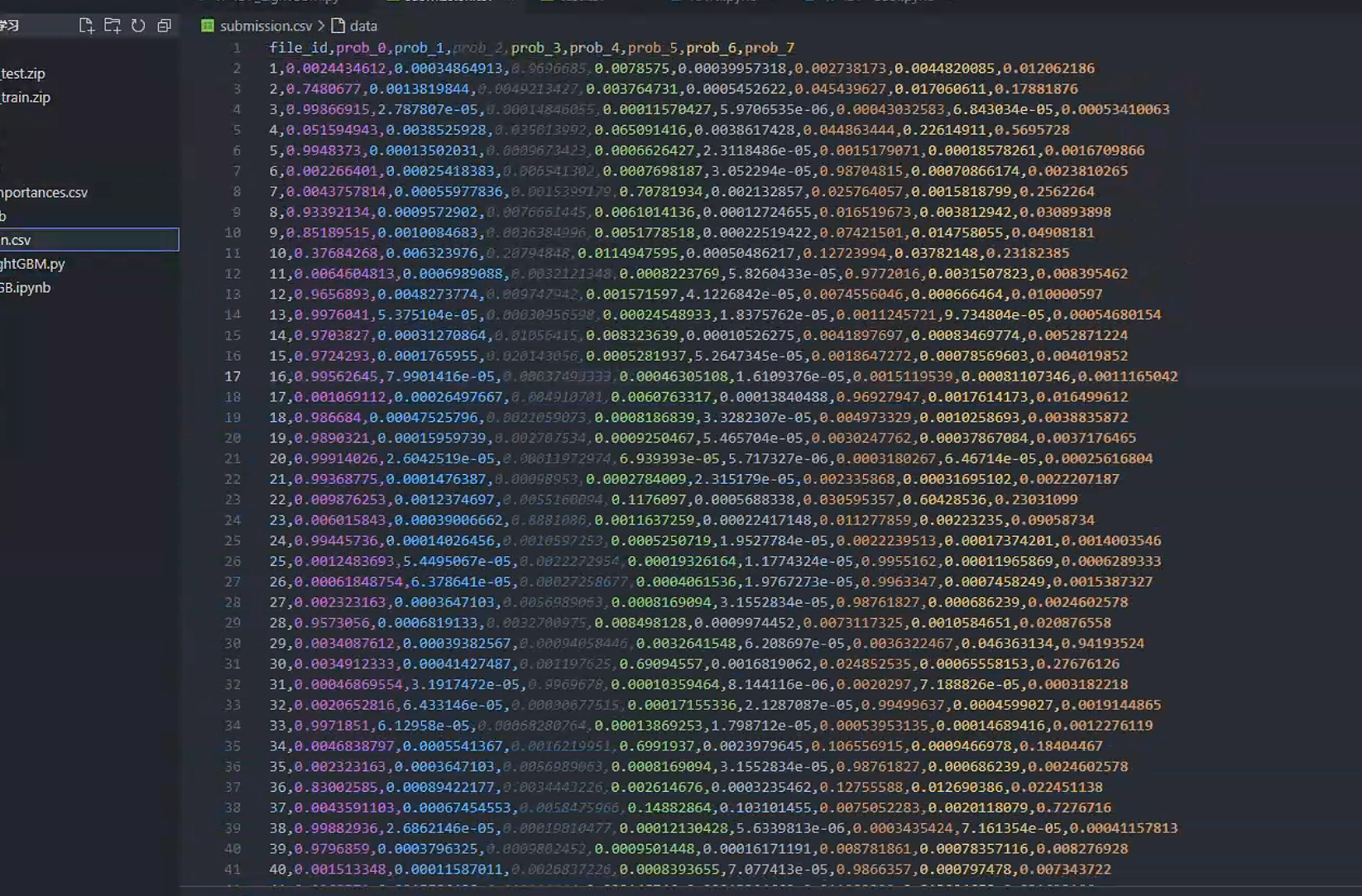

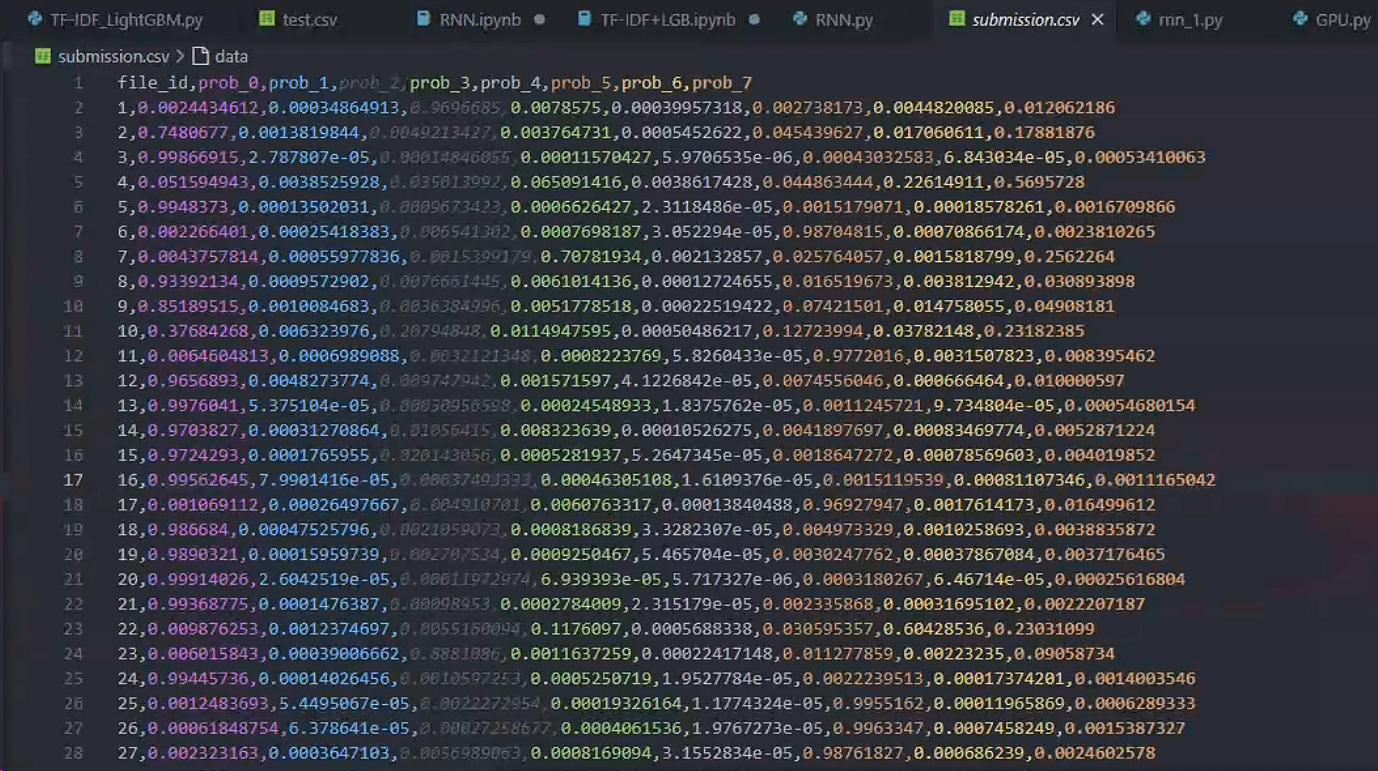

- Output:

`file_id,prob_0,prob_1,prob_2,prob_3,prob_4,prob_5,prob_6,prob_7`

`import os`

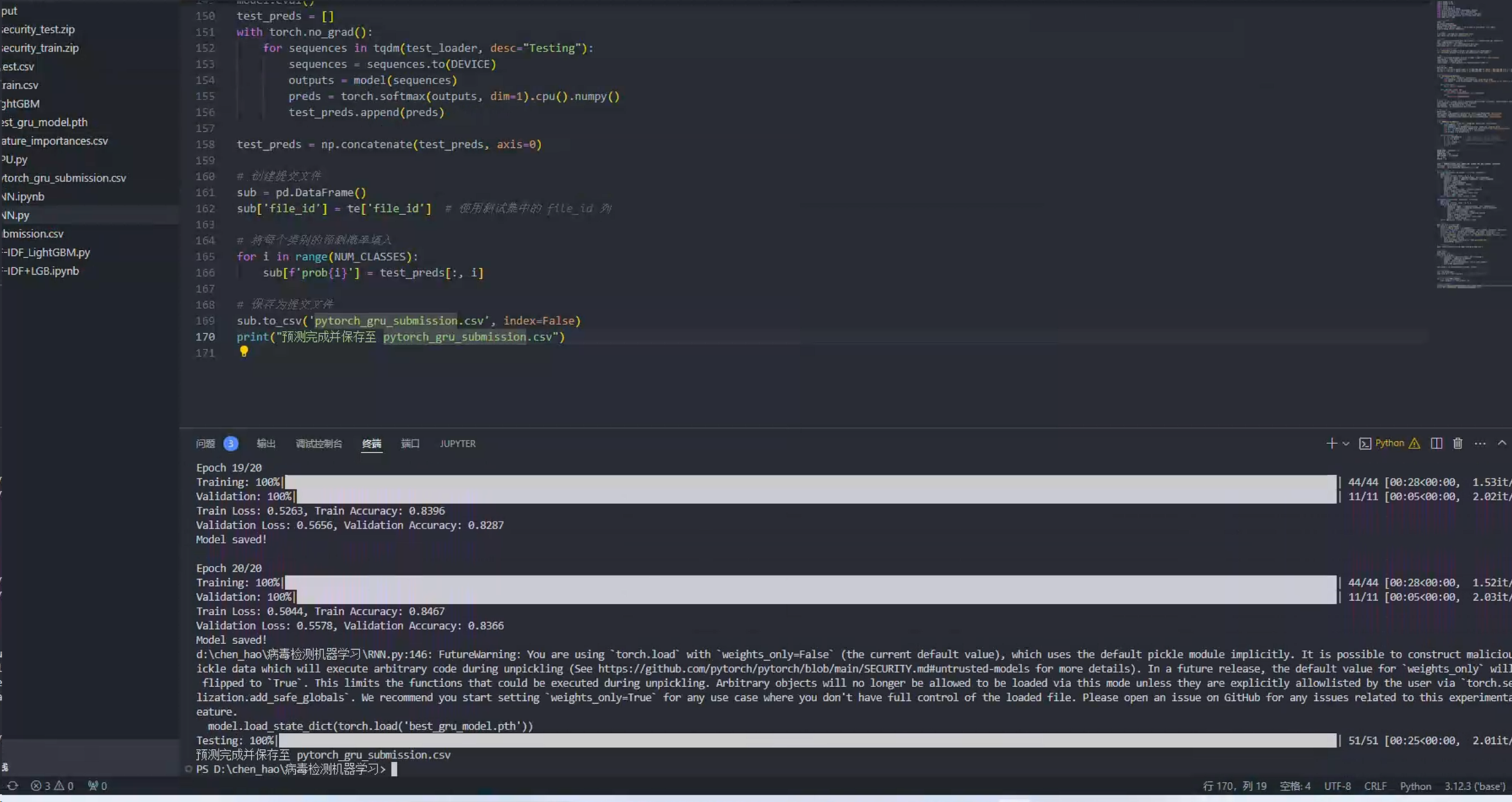

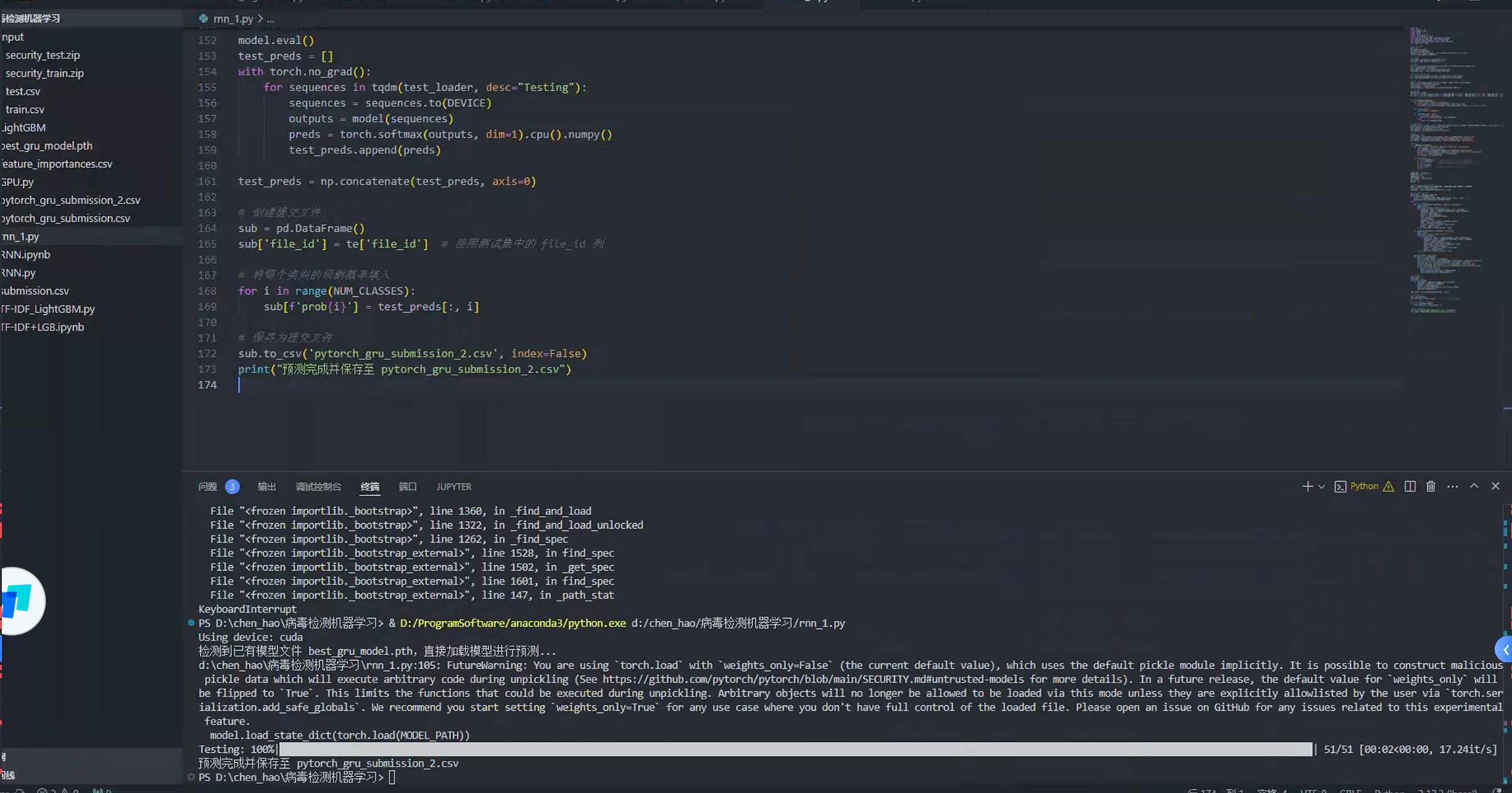

`PS D:\chen_hao\病毒检测机器学习> & D:/ProgramSoftware/anaconda3/python.exe d:/chen_hao/病毒检测机器学习/RNN.py`

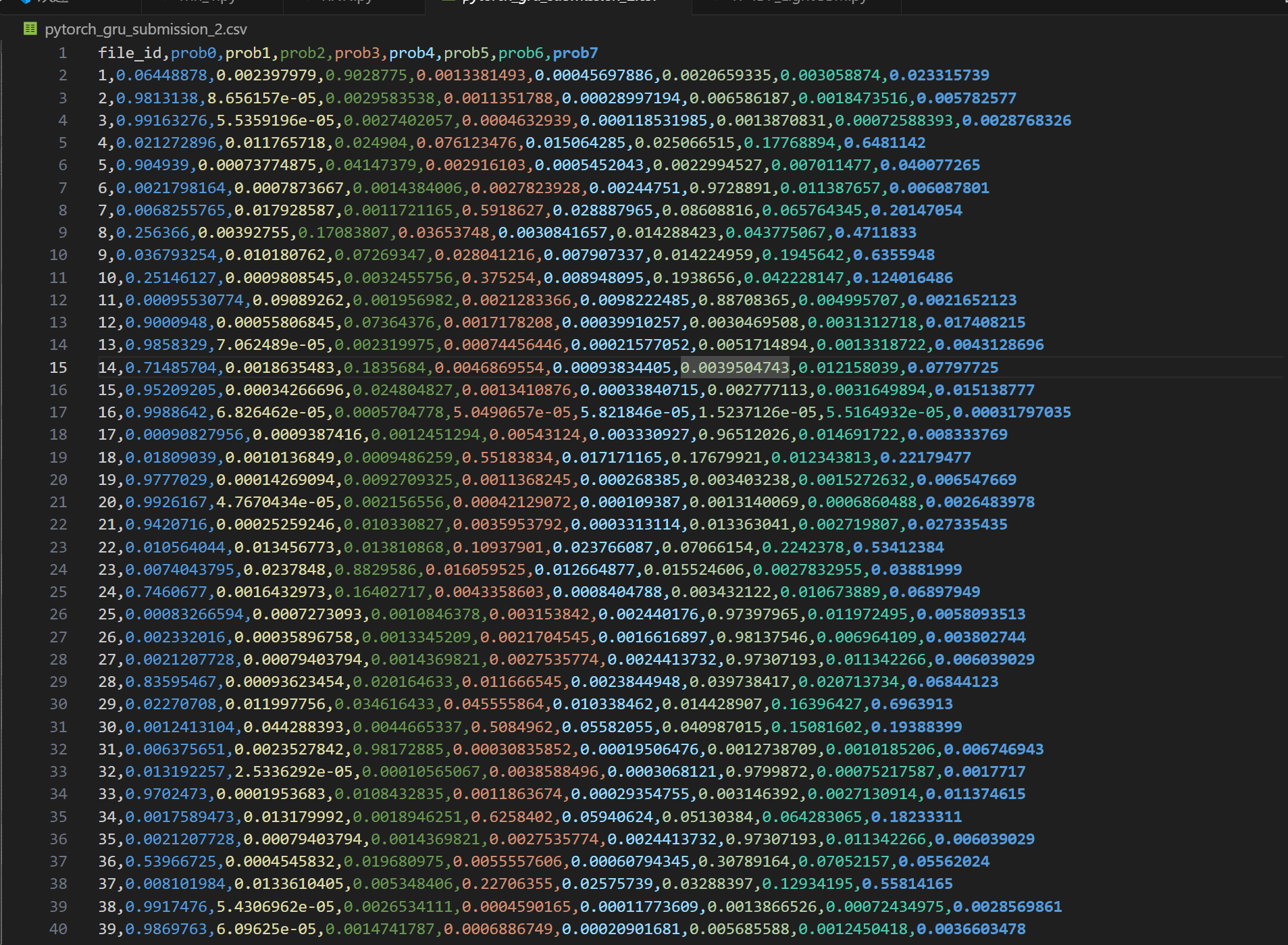

`file_id,prob0,prob1,prob2,prob3,prob4,prob5,prob6,prob7`
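The header above has one probability column per label (0 to 7). A result file in that layout could be produced with a small helper like the one below. This is a sketch using only the standard library; `write_submission`, the six-decimal formatting, and the toy probabilities are assumptions, not the report's code.

```python
import csv
import io

NUM_CLASSES = 8  # labels 0..7
HEADER = ["file_id"] + [f"prob{k}" for k in range(NUM_CLASSES)]

def write_submission(out, file_ids, probs):
    """Write one row per file: the file ID followed by its class probabilities."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(HEADER)
    for file_id, row in zip(file_ids, probs):
        if len(row) != NUM_CLASSES:
            raise ValueError(f"file {file_id}: expected {NUM_CLASSES} probabilities")
        writer.writerow([file_id] + [f"{p:.6f}" for p in row])

# Write to an in-memory buffer; a real run would pass an open file instead.
buffer = io.StringIO()
write_submission(buffer, [1, 2], [[0.9] + [0.1 / 7] * 7, [0.125] * 8])
lines = buffer.getvalue().splitlines()
print(lines[0])
```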

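Summary and Outlook

- Conclusions:

  - Ransomware: a simple ransomware demo was designed to show how file encryption and decryption work.

  - Machine learning: LightGBM with TF-IDF features reached a mean validation accuracy of 90.24% under 5-fold cross-validation, which makes it suitable for fast detection.

  - Deep learning: the GRU model classified complex API sequences more effectively and merits further study.

- Directions for improvement:

  - Introduce more sophisticated attention mechanisms so that the deep learning model captures sequence structure better.

  - Apply data augmentation to diversify the training data and improve generalization.

  - Fuse the machine learning and deep learning outputs to further improve detection and classification performance.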
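One simple form the proposed model fusion could take is a weighted average of the per-class probabilities from the two models. The sketch below is an assumption about how that might be done, not a method the report implements; `blend` and `weight_a` are hypothetical names, and the weight would normally be tuned on validation data.

```python
def blend(probs_a, probs_b, weight_a=0.5):
    """Weighted average of two per-class probability matrices (lists of rows)."""
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must be in [0, 1]")
    blended = []
    for row_a, row_b in zip(probs_a, probs_b):
        mixed = [weight_a * a + (1.0 - weight_a) * b for a, b in zip(row_a, row_b)]
        total = sum(mixed)
        blended.append([m / total for m in mixed])  # renormalize each row to sum to 1
    return blended

# Toy predictions for one file and two classes (hypothetical values).
lgb_probs = [[0.7, 0.3]]
gru_probs = [[0.5, 0.5]]
blended = blend(lgb_probs, gru_probs, weight_a=0.5)
print(blended)
```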